Is there a jira running where we can collate all this and have a vote?

Small oblongs broken???

|

|

Gaia Clary

mesh weaver

Join date: 30 May 2007

Posts: 884

05-13-2009 05:38

http://jira.secondlife.com/browse/VWR-8551

Omei Turnbull

Registered User

Join date: 19 Jun 2005

Posts: 577

05-13-2009 11:15

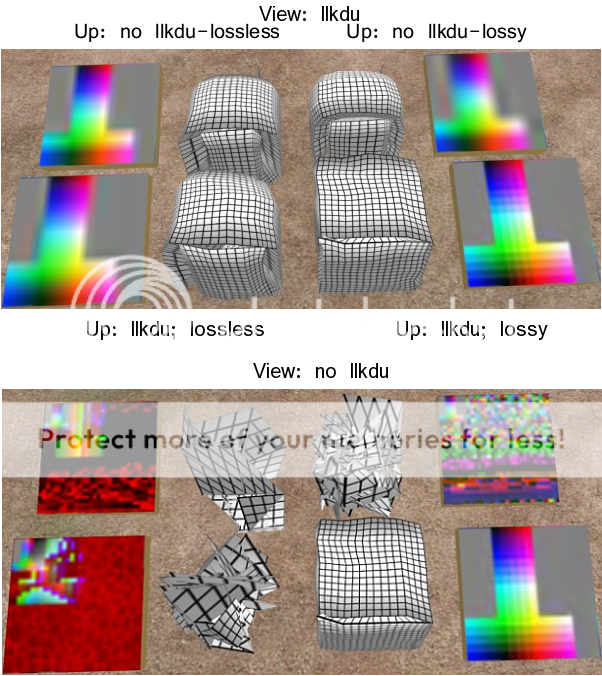

> It could also be the case, as formerly, that SL isn't configured to handle images of such sizes yet (this isn't the lossless bug). In this case nothing one can do would give perfect results until this underlying bug is fixed.

I do agree that what we are seeing seems to be the result of truncation of the compressed image data. I've done some experiments using libopenmv to download the compressed images. When downloading images compressed with Kakadu (i.e. by the vanilla client), the server tells the client the file is one size, but then sends fewer bytes. (The specific numbers vary with the image but are always the same for a given image.) I've also verified that the Kakadu binaries you can get at www.kakadusoftware.com compress and decompress these small textures with no apparent problems.

My interpretation of all this is that something in the client code that is not shared between the Kakadu and OpenJPEG paths is sending inconsistent information to the server. Instead of validating the upload and rejecting it as inconsistent, the server accepts it but then stumbles when it tries to send it back.
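
The mismatch described above (announced size versus bytes actually delivered) can be checked mechanically. What follows is a minimal sketch, assuming the texture arrives as a raw JPEG 2000 codestream and that you already have both the size the server announced and the bytes it sent; how you obtain them (e.g. through libopenmv) is left out, and `check_download` is a hypothetical helper. A complete codestream begins with the SOC marker (0xFF4F) and ends with the EOC marker (0xFFD9), so a short download loses its EOC.

```python
SOC = b"\xff\x4f"  # JPEG 2000 start-of-codestream marker
EOC = b"\xff\xd9"  # JPEG 2000 end-of-codestream marker


def check_download(declared_size: int, data: bytes) -> dict:
    """Compare the size the server announced with the bytes actually delivered.

    Hypothetical helper: it only inspects sizes and the SOC/EOC markers,
    it does not decode the image.
    """
    report = {
        "declared": declared_size,
        "received": len(data),
        "short_by": max(declared_size - len(data), 0),
        "starts_with_soc": data[:2] == SOC,
        "ends_with_eoc": data[-2:] == EOC,
    }
    # Suspect if bytes are missing or the closing EOC marker never arrived.
    report["looks_truncated"] = report["short_by"] > 0 or not report["ends_with_eoc"]
    return report


if __name__ == "__main__":
    # Made-up stream: SOC, 996 filler bytes, EOC; 1000 bytes announced, 700 sent.
    full = SOC + bytes(996) + EOC
    print(check_download(len(full), full[:700]))
```

Because a JPEG 2000 decoder makes use of whatever data it gets, a stream flagged here will usually still decode, only at reduced quality, so a check like this is a way to confirm truncation rather than to spot it visually.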

Drongle McMahon

Older than he looks

Join date: 22 Jun 2007

Posts: 494

05-13-2009 14:08

> My interpretation of all this is that something in the client code that is not shared between the Kakadu and OpenJPEG paths is resulting in inconsistent information being sent to the server. Instead of validating and rejecting the upload as inconsistent, the server is accepting it but then stumbling when it tries to send it back.

Omei Turnbull

Registered User

Join date: 19 Jun 2005

Posts: 577

05-13-2009 15:23

> If it's related to the effects discussed in the earlier jira, it may not be the server at all, but the client assuming it has all the data it needs before it actually does. There is a whole lot of stuff about how the client decides when it has finished downloading or retrieving from cache, and how this can lead to incomplete retrieval with images whose data is larger than expected. Too complicated for me to grasp the details (yet). Maybe this was fixed for the larger sizes only.

As far as I can tell, the only way the server could determine exactly how many bytes it would need to send for a given number of quality layers would be to parse the JPEG2000 data stream. The data stream doesn't have explicit layer markers, so the parser would have to understand a lot about the stream format. I'm guessing that the code the server actually uses to decide how much data to send for each LOD relies on rough empirical heuristics. (The robustness of JPEG2000 allows the decoder to make good use of almost all the data it gets, regardless of where it is truncated.) If so, the Lindens are probably continually re-tweaking these heuristics.

To me, the most interesting observation is that small textures uploaded via OpenJPEG don't seem to show the same gross truncation problem. If anyone discovers a counterexample, please let me know. Otherwise, I will continue on my current tack of comparing the two compressed streams, looking for a plausible explanation of what the relevant difference is.
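
To illustrate why the server would have to parse the stream, here is a minimal sketch that walks the main header of a raw JPEG 2000 codestream. It assumes the texture is a bare codestream rather than a JP2 file; `read_main_header` is a hypothetical helper, and the byte offsets follow the SIZ and COD marker-segment layouts of JPEG 2000 Part 1 (ITU-T T.800). The header does state the number of quality layers and where the tile data begins, but nothing in it says where each layer ends; only the optional packet-length markers (PLM/PLT) carry that kind of information, and an encoder is not required to write them.

```python
import struct

SOC, SIZ, COD, SOT = 0xFF4F, 0xFF51, 0xFF52, 0xFF90


def read_main_header(cs: bytes) -> dict:
    """Walk the main-header marker segments of a raw JPEG 2000 codestream.

    Hypothetical helper. Stops at the first SOT (start of tile-part),
    which is where the coded image data begins.
    """
    if len(cs) < 2 or struct.unpack(">H", cs[:2])[0] != SOC:
        raise ValueError("not a raw JPEG 2000 codestream (missing SOC)")
    info = {"markers": []}
    pos = 2
    while pos + 4 <= len(cs):
        # Each main-header segment after SOC: 2-byte marker, 2-byte length.
        marker, length = struct.unpack(">HH", cs[pos:pos + 4])
        if marker == SOT:
            info["tile_data_offset"] = pos
            break
        info["markers"].append(hex(marker))
        # The length field counts itself, so the body is length - 2 bytes.
        body = cs[pos + 4:pos + 2 + length]
        if marker == SIZ:
            # Body: Rsiz(2), Xsiz(4), Ysiz(4), XOsiz(4), YOsiz(4), ...
            xsiz, ysiz, xosiz, yosiz = struct.unpack(">IIII", body[2:18])
            info["width"], info["height"] = xsiz - xosiz, ysiz - yosiz
        elif marker == COD:
            # Body: Scod(1), progression order(1), number of layers(2), ...
            info["layers"] = struct.unpack(">H", body[2:4])[0]
        pos += 2 + length
    return info
```

Finding the byte offset at which layer *n* ends would additionally require decoding the packet headers that follow `tile_data_offset` (or reading PLM/PLT markers if present), which is the parsing effort Omei suspects the server avoids.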

Drongle McMahon

Older than he looks

Join date: 22 Jun 2007

Posts: 494

05-13-2009 16:54

> To me, the most interesting observation is that there doesn't seem to be the same gross truncation problem with small textures uploaded by OpenJPEG. If anyone discovers a counterexample to this, please let me know. Otherwise, I will continue on my current tack of comparing the two compressed streams, looking for a plausible explanation of what the relevant difference is.

Omei Turnbull

Registered User

Join date: 19 Jun 2005

Posts: 577

05-13-2009 18:08

Thanks, Drongle. That disproves my theory that OpenJPEG-encoded images are in general immune to the problem.

Aminom Marvin

Registered User

Join date: 31 Dec 2006

Posts: 520

05-13-2009 21:20

> Thanks, Drongle. That disproves my theory that OpenJPEG-encoded images are in general immune to the problem.

OpenJPEG isn't immune to the main problem (the lossless bug), but it is more resilient to it, as OpenJPEG seems to be better at compressing sculpts losslessly.